I've found VS Code Copilot to be invaluable for writing small scripts and the like for, say, visualizing my DEM output in Blender. It's a super way of speeding up tool-building in scenarios where you can immediately tell whether it has worked. With an education account, you get access to all kinds of top-end...

My experience training a local LLM (AI chatbot) on local data…

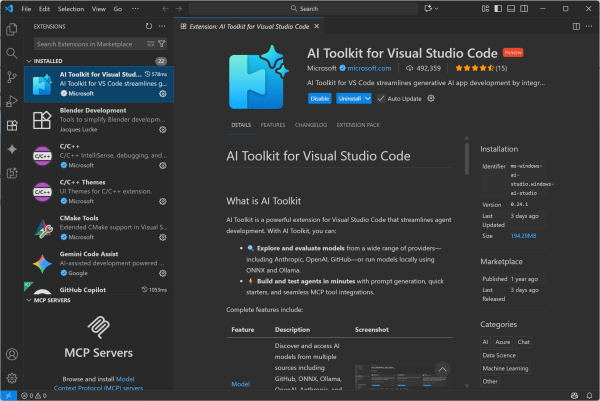

The author ran into challenges while trying several ways of feeding local documents into local Large Language Models (LLMs) via RAG (Retrieval-Augmented Generation). The methods explored were NVIDIA Chat with RTX, Ollama with Python scripts, and Ollama with Open WebUI. Results varied, with some methods giving inaccurate or incomplete answers. By comparison, Microsoft Copilot, running GPT-4 Turbo, significantly outperformed the local methods.
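
For readers unfamiliar with RAG, the retrieval step can be sketched in a few lines of Python. This is only an illustration and not the scripts used in the post: it swaps the embedding model a real pipeline would use for a simple bag-of-words cosine similarity, and the function names (`tokenize`, `cosine`, `retrieve`, `build_prompt`) and the sample chunks are all hypothetical. The resulting prompt is what would then be passed to a local model, for example one served by Ollama.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question.

    Assumption: bag-of-words similarity stands in for a real embedding model.
    """
    q = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda c: cosine(q, Counter(tokenize(c))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, chunks, k=2):
    """Assemble a prompt that asks the model to answer from retrieved context."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical document chunks, standing in for the local data.
chunks = [
    "The DEM simulation uses a time step of 1e-6 seconds.",
    "Blender can import STL meshes for visualization.",
    "Lunch is served at noon.",
]

if __name__ == "__main__":
    print(build_prompt("What time step does the DEM simulation use?", chunks, k=1))
```

If retrieval returns the wrong chunks, the model never sees the relevant text, which is one plausible source of the inaccurate or incomplete outputs described above.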