Even though I spend most of my time on Mastodon baiting Linux users, I have actually been keeping a serious eye on Linux for a while. I like Windows 11 for the most part; I have it set up nicely with Windhawk, Raycast, and FilePilot such that it works very well for me. Launching and using... Continue Reading →

[Academic Tech] XREAL Air Pro 2 – Immersive Augmented Reality Glasses

At the recent SEB conference, I traveled sans laptop, working on the assumption I could do everything urgent on my OnePlus Open. I could... but what I found was that some tasks just needed a larger screen (specifically, when remoting into a workstation, it was difficult to make out everything on the small 6-7" unfolded screen).... Continue Reading →

[Academic Tech] OnePlus Open Apex Edition (after 2 months)

I fairly recently upgraded my phone from the Samsung Fold 3 to the OnePlus Open (OPO). As per usual with my tech, through a combination of selling the old gadget and picking the new one up 'as new' on eBay, I was able to keep overall costs very low. I've had the phone a couple... Continue Reading →

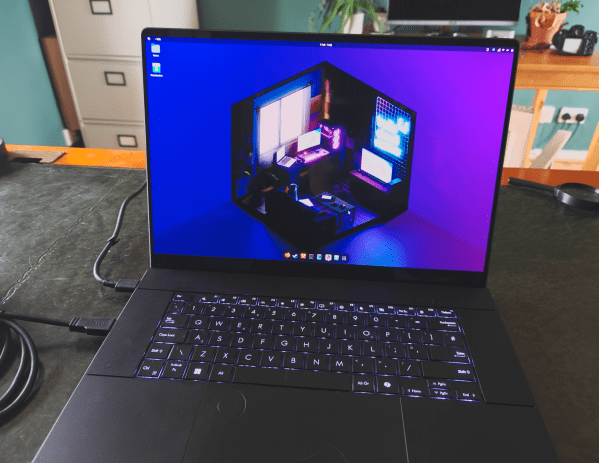

[Academic Tech] ASUS ProArt P16 AMD Copilot+ Review: Incredible performance and design

Welp, it’s new computer time. My Surface Laptop Studio was starting to slow me down a bit with work, and wasn’t keeping up with games, even with the eGPU. I was also starting to feel like I was missing out on the new Copilot+ PC stuff – I dabble with local LLMs and stuff, and... Continue Reading →

[Academic Tech] Philips 40″ 5K2K Ultrawide Thunderbolt monitor

A shortish review here about the monitor I've been using at work for about 6 months now. It's the Philips 40" curved monitor: I needed a new monitor to go with the new computers my team and I got set up with, and I very specifically wanted a very high-resolution monitor for multi-tasking. I wasn't... Continue Reading →

Dabbling with Linux again. And quickly giving up… again.

I tried installing Linux again. Got frustrated, went back to Windows.

My experience training a local LLM (AI chatbot) on local data…

The user encountered challenges while attempting to use various methods to feed information into local Large Language Models (LLMs) via RAG (Retrieval-Augmented Generation). They explored methods such as Nvidia Chat with RTX, Ollama with Python scripts, and Ollama with Open WebUI. Results varied, with some methods providing inaccurate or incomplete outputs. By comparison, Microsoft Copilot, running GPT-4 Turbo, significantly outperformed the local methods.

[Academic Tech] JBL Tour One M2 Noise Cancelling Headphones

*Note, I was sent these headphones for review by JBL nearly a year ago, but they've had precisely zero input into this review (obviously, given I haven't written it until now), and it doesn't affect what I'm writing at all. The only benefit I stand to gain is the Amazon affiliate link I'll shove at... Continue Reading →

[Academic Tech] Surface Laptop Studio (gen 1) long term review

Back in September last year, I wrote a post about the Surface Laptop Studio I'd just bought. I'd only had it a few days at the time, and so it was very much a first impressions post. I've now had the device over a year, and with the second gen released just last month, now... Continue Reading →

Windows is getting me down

**WARNING - this is a very niche rant** I'm quite a tech nerd. I enjoy gadgets, and phones, and even writing software and addons. A big part of this is that I just generally enjoy interacting with my computer's operating system to get stuff done. I've been firmly embedded in Windows most of my life,... Continue Reading →